What is AgentLoop?

AgentLoop is an autonomous compound AI development platform built on spec-driven development principles. Rather than interacting with a single AI assistant, developers write structured specifications and AgentLoop’s orchestrator decomposes them into AGILE-style tasks with clear acceptance criteria. These tasks are assigned to specialized agents---product-manager for planning and decomposition, engineer for implementation, qa-tester for automated verification---that execute in coordinated pipelines with no human input required. The system runs 24/7, manages task dependencies, maintains project context through a memory system, and integrates with MCP (Model Context Protocol) tools for schema awareness, component documentation, and real-time verification. The result: autonomous AI development that scales beyond single-turn conversations to multi-day, multi-layer projects---working through the night while you sleep.

The Approach

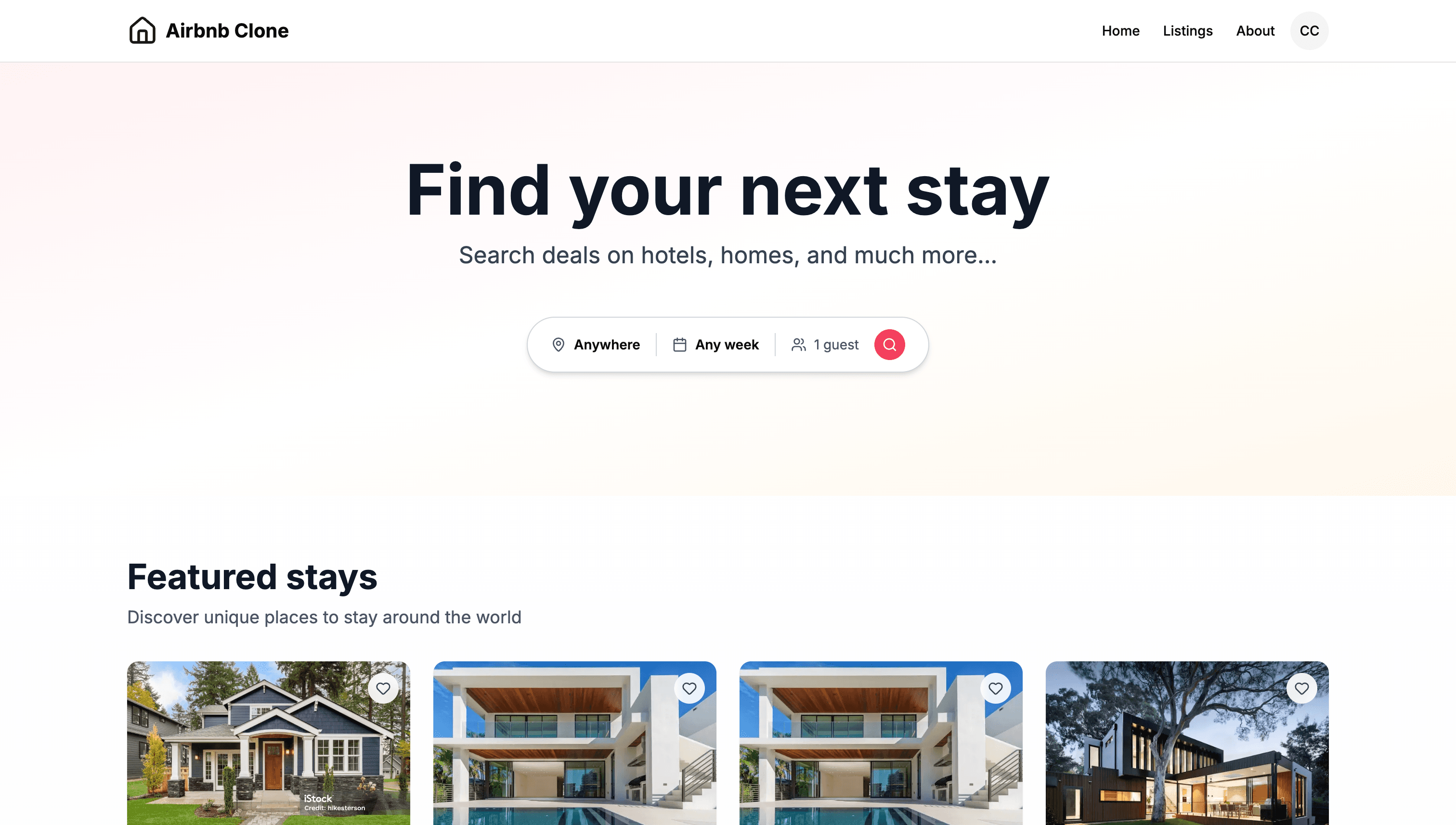

This case study examines how AgentLoop transformed three detailed prompts into a full-stack vacation rental platform. The key insight: each prompt described a layer of the application, and each layer built on the previous one.

This is not “vague prompt in, magic out.” Each prompt was detailed about what to build, and agents used MCP tools to verify their work against the actual codebase.

The Three Prompts

Prompt 1: Database Layer

“Build the Supabase database schema for an Airbnb clone. Use Supabase CLI to initialize the project with `supabase init` and create all migrations in /supabase/migrations/, then use `supabase db push` to apply migrations and `supabase gen types typescript` to generate TypeScript types after schema is complete. Use Supabase MCP to inspect the database schema, verify tables and relationships are created correctly, test RLS policies with different user contexts, and validate that database functions return expected results. Create migrations for all core tables including users extending Supabase auth, listings with location and pricing, listing images, amenities as a many-to-many relationship, availability for date-specific pricing and blocking, bookings with status tracking, reviews with category ratings, and favorites. Add appropriate indexes for common queries. Set up RLS policies ensuring users can only modify their own data while listings and reviews remain publicly readable. Create database functions for checking availability, calculating booking totals, and aggregating ratings. Seed common amenities and set up storage buckets for images.”

What this prompt specifies:

- Tooling: Supabase CLI commands, MCP for verification

- Tables: users, listings, images, amenities (many-to-many), availability, bookings, reviews, favorites

- Security: RLS policies with specific read/write rules

- Optimization: indexes for common queries

- Functions: availability checking, booking totals, rating aggregation

- Infrastructure: storage buckets for images

What this prompt does NOT specify:

- SQL syntax

- Exact column definitions

- Index implementation details

- Migration file structure

The prompt describes what the database should contain and what it should do. The how is left to the agents.
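To make the "what, not how" split concrete, here is a minimal sketch of the logic that the requested availability and booking-total functions would need to encode. It is written in TypeScript rather than SQL purely for illustration; the `Booking` shape, the field names, and the per-date override map are assumptions, not the schema the agents actually generated.

```typescript
// Hypothetical shapes: the real column names were chosen by the agents
// and are not shown in the case study.
interface Booking {
  checkIn: string; // ISO date (YYYY-MM-DD), inclusive
  checkOut: string; // ISO date (YYYY-MM-DD), exclusive
  status: "pending" | "confirmed" | "cancelled";
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Parse a YYYY-MM-DD string as UTC midnight so daylight-saving shifts
// cannot skew night counts.
function toUtcDay(isoDate: string): number {
  return Date.parse(`${isoDate}T00:00:00Z`);
}

function nightsBetween(checkIn: string, checkOut: string): number {
  return Math.round((toUtcDay(checkOut) - toUtcDay(checkIn)) / MS_PER_DAY);
}

// Two half-open ranges [checkIn, checkOut) overlap when each one starts
// before the other ends. Cancelled bookings do not block dates.
function isAvailable(existing: Booking[], checkIn: string, checkOut: string): boolean {
  const start = toUtcDay(checkIn);
  const end = toUtcDay(checkOut);
  return !existing.some(
    (b) => b.status !== "cancelled" && toUtcDay(b.checkIn) < end && start < toUtcDay(b.checkOut),
  );
}

// Total = sum of nightly prices, using a date-specific override where one exists.
function bookingTotal(
  basePrice: number,
  overrides: Partial<Record<string, number>>,
  checkIn: string,
  checkOut: string,
): number {
  let total = 0;
  for (let t = toUtcDay(checkIn); t < toUtcDay(checkOut); t += MS_PER_DAY) {
    const key = new Date(t).toISOString().slice(0, 10);
    total += overrides[key] ?? basePrice;
  }
  return total;
}
```

Treating the check-out date as exclusive lets one guest check out on the same day the next guest checks in, which is the usual convention for nightly rentals.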

Prompt 2: Backend Layer

“Build the Next.js 14 backend for an Airbnb clone using the existing Supabase schema. Use Supabase MCP to query the database schema for reference when building TypeScript types and to test queries during development, and to verify RLS policies work correctly with the server actions. Use Supabase CLI to regenerate TypeScript types with `supabase gen types typescript --local > types/database.ts` if any schema changes are needed. Set up Supabase client utilities for server and client usage with proper cookie handling and middleware for auth session refresh. Create TypeScript types matching the database schema. Build Zod validation schemas for all inputs. Implement server actions for listings CRUD with image uploads, bookings with availability checking and Stripe payment intents, reviews restricted to completed booking guests, favorites toggling, and user profile management. Add route handlers for OAuth callback, Stripe webhooks to update booking status on payment events, and a search endpoint with filtering for location, dates, guests, price range, amenities, and map bounds.”

Critical phrase: “using the existing Supabase schema”

This prompt explicitly builds on Prompt 1. It assumes the database layer is complete. It references:

- Type generation from the existing schema

- RLS policies that already exist

- Tables that are already created

What this prompt specifies:

- Framework: Next.js 14 with server actions

- Client setup: cookie handling, middleware for auth refresh

- Validation: Zod schemas for all inputs

- Server actions: listings CRUD, bookings with Stripe, reviews, favorites, profiles

- Route handlers: OAuth callback, Stripe webhooks, search API

- Search capabilities: location, dates, guests, price range, amenities, map bounds

What this prompt does NOT specify:

- File structure

- Function signatures

- Error handling patterns

- Stripe API integration details
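As an illustration of what the prompt leaves open, the search endpoint's filtering could be sketched as a pure function like the one below. The `Listing` and `SearchFilters` shapes and every field name are hypothetical, the date filter is omitted for brevity, and in the real project this filtering most likely happens in a database query rather than in memory.

```typescript
// Hypothetical listing shape and filter names, for illustration only.
interface Listing {
  id: string;
  city: string;
  pricePerNight: number;
  maxGuests: number;
  amenities: string[];
  lat: number;
  lng: number;
}

interface SearchFilters {
  city?: string;
  guests?: number;
  minPrice?: number;
  maxPrice?: number;
  amenities?: string[];
  bounds?: { north: number; south: number; east: number; west: number };
}

// A listing matches only if it passes every filter that was supplied.
function matchesFilters(listing: Listing, f: SearchFilters): boolean {
  if (f.city !== undefined && listing.city.toLowerCase() !== f.city.toLowerCase()) return false;
  if (f.guests !== undefined && listing.maxGuests < f.guests) return false;
  if (f.minPrice !== undefined && listing.pricePerNight < f.minPrice) return false;
  if (f.maxPrice !== undefined && listing.pricePerNight > f.maxPrice) return false;
  // Every requested amenity must be present on the listing.
  if (f.amenities && !f.amenities.every((a) => listing.amenities.indexOf(a) !== -1)) return false;
  if (f.bounds) {
    const { north, south, east, west } = f.bounds;
    // Assumes the map viewport does not cross the antimeridian.
    if (listing.lat > north || listing.lat < south || listing.lng > east || listing.lng < west) {
      return false;
    }
  }
  return true;
}

function searchListings(all: Listing[], filters: SearchFilters): Listing[] {
  return all.filter((listing) => matchesFilters(listing, filters));
}
```

Leaving a filter undefined means "no constraint", so an empty `SearchFilters` object returns every listing.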

Prompt 3: Frontend Layer

“Build the Next.js 14 frontend for an Airbnb clone using Shadcn UI and the existing backend. Use Shadcn CLI to initialize with `npx shadcn@latest init` selecting neutral color scheme and CSS variables, and use `npx shadcn@latest add [component]` to add components as needed throughout development. Use Shadcn MCP to look up component APIs, check available variants and props, find usage examples, and understand component composition patterns before implementing each feature. Use Supabase MCP to verify queries return expected data shapes when debugging frontend data fetching issues. Set up Zustand for search filter state and TanStack Query for server state. Build the home page with an expandable search bar and featured listings grid. Create the search results page with a filterable listing grid alongside an interactive map with price markers. Build the listing detail page with image gallery, amenities, availability calendar, reviews section, and sticky booking widget with Stripe checkout. Create a multi-step new listing form for hosts. Add a dashboard with tabs for trips and hosted listings and favorites. Implement the host calendar view for managing availability and pricing. Ensure mobile responsiveness throughout with appropriate layout adaptations.”

Critical phrase: “using the existing backend”

This prompt explicitly builds on Prompt 2, which built on Prompt 1. The entire stack is referenced.

What this prompt specifies:

- UI framework: Shadcn UI with specific initialization

- State management: Zustand for filters, TanStack Query for server state

- Pages: home, search results with map, listing detail, multi-step form, dashboard, host calendar

- Features: image gallery, availability calendar, sticky booking widget, mobile responsiveness

- MCP usage: Shadcn for component APIs, Supabase for data shape verification

What this prompt does NOT specify:

- Component file structure

- CSS specifics

- Map implementation details

- Form validation logic

The Layered Architecture

Layer 3: Frontend (Prompt 3)

- Uses Shadcn MCP for component APIs

- Uses Supabase MCP to verify data shapes

- "using the existing backend"

|

v

Layer 2: Backend (Prompt 2)

- Uses Supabase MCP for schema reference

- Uses Supabase CLI for type generation

- "using the existing Supabase schema"

|

v

Layer 1: Database (Prompt 1)

- Uses Supabase CLI for migrations

- Uses Supabase MCP for verification

- Foundation for everything above

Each layer explicitly references the layer below. This is not accidental: it tells AgentLoop exactly how the pieces connect.

The Task Decomposition

From these three prompts, AgentLoop’s product-manager agent generated 87 tasks:

Database Layer Tasks (from Prompt 1):

initialize-supabase-project

create-users-table-migration-extending-supabase-auth

create-listings-table-migration

create-listing-images-table-migration

create-amenities-table-and-junction-migration

create-availability-table-migration

create-bookings-table-migration

create-reviews-table-migration

create-favorites-table-migration

create-indexes-for-common-query-patterns

create-rls-policies-for-users-table

create-rls-policies-for-listings-table

...

Backend Layer Tasks (from Prompt 2):

set-up-supabase-client-utilities

create-typescript-types-from-schema

create-zod-validation-schemas-for-listings

create-zod-validation-schemas-for-bookings

implement-listings-crud-server-actions

implement-booking-server-actions-with-availability

integrate-stripe-payment-intents-in-booking-flow

create-stripe-webhook-handler

implement-search-endpoint-with-filters

...

Frontend Layer Tasks (from Prompt 3):

initialize-shadcn-ui

set-up-zustand-store-for-search-filters

set-up-tanstack-query-configuration

build-home-page-with-featured-listings-grid

build-expandable-search-bar-component

build-search-results-page-with-listing-grid

build-interactive-map-with-price-markers

build-listing-detail-page

build-image-gallery-component

build-multi-step-new-listing-form-step-1-property

build-booking-widget-with-stripe-checkout

build-user-dashboard-with-tabs

implement-mobile-responsiveness-forms-and-dashboard

...

The ratio: 3 prompts became 87 tasks. That is 29x amplification of human intent into discrete, executable work items.

The MCP Context Advantage

All three prompts reference MCP tools. This is not decoration: it is how agents maintain context awareness across layers.

Supabase MCP provided:

- Schema inspection: Agents could query actual table structures

- Relationship verification: Agents confirmed foreign keys existed

- RLS testing: Agents tested policies with different user contexts

- Query validation: Agents verified queries returned expected shapes

Shadcn MCP provided:

- Component API lookup: Exact props, variants, and types

- Usage examples: How to compose components correctly

- Pattern verification: Ensuring components were used as intended

Example workflow:

- Database agent creates `listings` table with specific columns

- Backend agent uses Supabase MCP to query the schema

- Backend agent generates TypeScript types matching actual columns

- Frontend agent uses Supabase MCP to verify data shape

- Frontend agent builds listing card component with correct types

- Shadcn MCP verifies Card component usage is correct

No guessing. No assumptions. Verified context at each step.
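The "verify the data shape" step in the workflow above can be pictured as a runtime check of a returned row against the expected columns. The sketch below is an assumption about what such a check might look like, not AgentLoop's or Supabase MCP's actual mechanism; the `ListingRow` columns are a hypothetical subset of the real table.

```typescript
// Hypothetical subset of the listings columns; the real schema was
// generated by the agents and is not reproduced in the case study.
interface ListingRow {
  id: string;
  title: string;
  price_per_night: number;
}

type ColumnType = "string" | "number" | "boolean";

const LISTING_COLUMNS: Record<string, ColumnType> = {
  id: "string",
  title: "string",
  price_per_night: "number",
};

// Returns a list of mismatches; an empty list means the row has the expected shape.
function shapeErrors(row: unknown, columns: Record<string, ColumnType>): string[] {
  if (typeof row !== "object" || row === null) return ["row is not an object"];
  const record = row as Record<string, unknown>;
  const errors: string[] = [];
  for (const name of Object.keys(columns)) {
    if (!(name in record)) {
      errors.push(`missing column: ${name}`);
    } else if (typeof record[name] !== columns[name]) {
      errors.push(`wrong type for ${name}: expected ${columns[name]}, got ${typeof record[name]}`);
    }
  }
  return errors;
}

// Type guard a frontend component could use before rendering a listing card.
function isListingRow(row: unknown): row is ListingRow {
  return shapeErrors(row, LISTING_COLUMNS).length === 0;
}
```

A check like this turns "the card renders nothing" into a specific message such as a missing column or a price that arrived as a string.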

The Agent Pipeline

Every task ran through the same pipeline:

product-manager (decomposition)

|

v

engineer (implementation)

|

v

qa-tester (verification)

Worker logs show this pattern repeating 87 times:

Worker starting task 728 as engineer...

Worker completed task 728 as engineer

Worker starting task 728 as qa-tester...

Worker completed task 728 as qa-tester

The qa-tester agent generated verification reports for each task. This automatic QA is what kept the codebase stable across 87 separate implementations.
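The engineer-then-qa-tester sequence in the logs can be sketched as a small loop over stages. This is an illustrative model only: the `StageHandler` type, the `runTask` function, and the failure log line are hypothetical, and real agents are LLM-driven rather than simple callbacks. Only the "starting" and "completed" log formats are taken from the worker logs above.

```typescript
type Role = "engineer" | "qa-tester";

// Stage order once the product-manager has decomposed a prompt into tasks.
const STAGES: Role[] = ["engineer", "qa-tester"];

// A stage handler returns true on success. These are stand-ins for real agents.
type StageHandler = (taskId: number) => boolean;

// Runs one task through every stage in order and stops at the first failure,
// so a task is never marked verified unless implementation succeeded first.
function runTask(taskId: number, handlers: Record<Role, StageHandler>, log: string[]): boolean {
  for (const role of STAGES) {
    log.push(`Worker starting task ${taskId} as ${role}...`);
    if (!handlers[role](taskId)) {
      // Hypothetical line: the source logs only show successful runs.
      log.push(`Worker failed task ${taskId} as ${role}`);
      return false;
    }
    log.push(`Worker completed task ${taskId} as ${role}`);
  }
  return true;
}
```

Calling `runTask(728, handlers, log)` with two handlers that both succeed appends the same four lines shown in the worker log excerpt.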

The Timeline

- First commit: December 10, 2025 at 12:28 PM

- Last task completed: December 12, 2025 at 3:06 PM

- Total agent work time: 36 hours across 3 sprints

Each prompt kicked off a sprint. Multiple tasks ran in parallel where dependencies allowed.
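One common way to run tasks "in parallel where dependencies allow" is to group them into waves, where each wave contains only tasks whose dependencies finished in earlier waves. The sketch below shows that idea; it is not AgentLoop's scheduler, and the dependency edges in `exampleGraph` are assumptions layered on task names from the lists above.

```typescript
// Task ids mapped to the ids they depend on.
type DependencyGraph = Record<string, string[]>;

// Groups tasks into waves. Every task in a wave has all of its dependencies
// in earlier waves, so tasks within the same wave can run in parallel.
function planWaves(graph: DependencyGraph): string[][] {
  const done: Record<string, boolean> = {};
  let remaining = Object.keys(graph);
  const waves: string[][] = [];
  while (remaining.length > 0) {
    const ready = remaining.filter((id) => graph[id].every((dep) => done[dep] === true));
    if (ready.length === 0) {
      throw new Error("dependency cycle or unknown dependency among: " + remaining.join(", "));
    }
    for (const id of ready) done[id] = true;
    remaining = remaining.filter((id) => done[id] !== true);
    waves.push(ready);
  }
  return waves;
}

// Hypothetical dependencies between a few of the database-layer tasks.
const exampleGraph: DependencyGraph = {
  "initialize-supabase-project": [],
  "create-listings-table-migration": ["initialize-supabase-project"],
  "create-bookings-table-migration": ["create-listings-table-migration"],
  "create-favorites-table-migration": ["create-listings-table-migration"],
  "create-listing-images-table-migration": ["create-listings-table-migration"],
};
```

With these assumed edges, `planWaves(exampleGraph)` yields three waves: project initialization, then the listings table, then the three tables that reference listings, which could be built concurrently.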

The Memory System

As agents worked, they created 27 memory files:

database-schema-reference.md

booking-widget-implementation-guide.md

comprehensive-rls-testing-guide.md

stripe-webhook-implementation.md

search-api-pagination-bug-analysis.md

These are operational knowledge. When a qa-tester needed to verify the booking flow, it read the implementation guide. When an engineer needed to extend the search API, it referenced the pagination analysis.

Agents teaching agents. Institutional memory built automatically.

The User Experience

The user provided:

- Three detailed prompts describing each layer

- Environment variables (Supabase URL, keys, Stripe keys)

- Answers to clarifying questions

- Browser testing and feedback

The user did NOT provide:

- SQL schemas

- File structures

- Component implementations

- Error handling code

- Individual bug fixes

When issues surfaced, prompts remained high-level:

“The search page is showing 0 stays.”

AgentLoop traced this to an RLS policy issue, diagnosed the specific condition failing, and fixed it.
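The case study does not say which policy condition failed, so the following is only a hypothetical model of how a read rule that is too strict produces the "0 stays" symptom. It mirrors one real property of row-level security: rows that fail a read policy are filtered out of the result rather than raising an error, which is why the page looks empty instead of broken. The `Row` and `Policy` types and both policy functions are illustrative names.

```typescript
interface Row {
  id: string;
  ownerId: string;
}

// A policy decides whether a given user (null = anonymous visitor) may see a row.
type Policy = (userId: string | null, row: Row) => boolean;

// Intended rule from Prompt 1: listings remain publicly readable.
const publicRead: Policy = () => true;

// Appropriate for writes, but too strict if applied to reads by mistake.
const ownerOnly: Policy = (userId, row) => userId !== null && userId === row.ownerId;

// Rows failing the policy are dropped from the result, with no error raised.
function visibleRows(rows: Row[], userId: string | null, readPolicy: Policy): Row[] {
  return rows.filter((row) => readPolicy(userId, row));
}

const sampleListings: Row[] = [
  { id: "listing-1", ownerId: "host-1" },
  { id: "listing-2", ownerId: "host-2" },
];
```

Under `ownerOnly`, an anonymous visitor sees no rows and a signed-in host sees only their own listing; under `publicRead`, every visitor sees both.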

“Why isn’t the Reserve button working?”

AgentLoop investigated the booking flow, found a date validation bug, fixed it, then discovered missing Stripe environment variables. It reported both issues.

The Human Intervention: Calendar Expansion Bug

While AgentLoop worked autonomously on 87 tasks, there were moments where human feedback accelerated problem resolution. One notable example: the calendar popover issue.

The Symptom:

When clicking the “Check in / Check out” button, the calendar popover refused to expand. The button clicked, but nothing happened. The search bar collapsed instead of opening the date picker.

What the User Reported:

“The search page is showing 0 stays.”

“Why isn’t the Reserve button working?”

What AgentLoop Diagnosed:

The investigation revealed two interconnected issues that the agents documented in their memory files:

Issue 1: Parent onBlur Race Condition

The search bar’s parent container had an onBlur handler that was firing before the Radix Popover could open:

Click Event Timeline:

t=0ms: User clicks button

t=1ms: Focus shifts to button

t=2ms: Parent onBlur fires

t=3ms: handleClickOutside checks: datePopoverOpen = false (still!)

t=4ms: setIsExpanded(false) is called

t=5ms: Component re-renders, SearchBar collapses

t=6ms: Radix's click handler would fire (too late)

The check if (!datePopoverOpen && !guestPopoverOpen) happened before the popover state could update, creating a race condition that collapsed the search bar before the calendar could appear.

Issue 2: Uncontrolled Component State Loss

Even after fixing the popover opening, the date range selection had a secondary bug: clicking the first date, then the second date, would reset the selection instead of completing the range.

Root cause: The AvailabilityCalendar was an uncontrolled component with internal state. When the popover closed and reopened, that state was lost. The calendar forgot the first selected date.
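The difference between the two designs comes down to who owns the range. A minimal sketch, assuming a `DateRange` shape and a `selectDay` transition that are not taken from the project's code: when the range lives in the parent and is passed down, a calendar that unmounts and remounts between clicks still sees the first selected date.

```typescript
interface DateRange {
  from?: string; // ISO date (YYYY-MM-DD)
  to?: string; // ISO date (YYYY-MM-DD)
}

// Pure transition: given the current range and the clicked day, return the next range.
function selectDay(range: DateRange, day: string): DateRange {
  // No start yet, or a complete range already exists: begin a new range.
  if (range.from === undefined || range.to !== undefined) return { from: day };
  // A click before the start date restarts the selection from that day.
  // ISO dates compare correctly as plain strings.
  if (day < range.from) return { from: day };
  return { from: range.from, to: day };
}

// Stand-in for the parent component that owns the selected range.
class SearchBarState {
  selectedRange: DateRange = {};

  // Each call models a freshly mounted calendar that receives selectedRange
  // as a prop and reports the click back to the parent.
  clickDay(day: string): void {
    this.selectedRange = selectDay(this.selectedRange, day);
  }
}
```

Because `selectDay` keeps no state of its own, closing and reopening the popover between the first and second click cannot lose the start date.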

The Fix:

AgentLoop implemented a multi-part solution:

- Added `setTimeout(..., 0)` to the `onBlur` handler, deferring the collapse check to the next event loop tick

- Converted AvailabilityCalendar from uncontrolled to controlled, accepting a `selectedRange` prop from the parent

- Added `onInteractOutside` handlers to Radix popovers for proper click-outside behavior
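Why deferring the blur check helps can be shown with a simplified model that replaces React and the browser with plain objects. Everything here is an assumption made for illustration: `TaskQueue` stands in for the browser's task queue, `defer` stands in for `setTimeout(fn, 0)`, and state is mutated directly instead of going through React state updates.

```typescript
// Minimal stand-in for the browser's task queue.
class TaskQueue {
  private tasks: Array<() => void> = [];

  // Models setTimeout(task, 0): run the task after the current handlers finish.
  defer(task: () => void): void {
    this.tasks.push(task);
  }

  flush(): void {
    while (this.tasks.length > 0) {
      const task = this.tasks.shift();
      if (task) task();
    }
  }
}

interface SearchBarModel {
  isExpanded: boolean;
  datePopoverOpen: boolean;
  guestPopoverOpen: boolean;
}

// The collapse check described in the diagnosis.
function collapseIfNoPopover(bar: SearchBarModel): void {
  if (!bar.datePopoverOpen && !bar.guestPopoverOpen) bar.isExpanded = false;
}

// Replays one click: the parent's onBlur runs first, then the popover's click handler.
function simulateClick(deferBlurCheck: boolean): SearchBarModel {
  const bar: SearchBarModel = { isExpanded: true, datePopoverOpen: false, guestPopoverOpen: false };
  const queue = new TaskQueue();

  // Parent onBlur: check immediately (the bug) or on the next tick (the fix).
  if (deferBlurCheck) queue.defer(() => collapseIfNoPopover(bar));
  else collapseIfNoPopover(bar);

  // The popover's click handler only takes effect if the bar is still expanded.
  if (bar.isExpanded) bar.datePopoverOpen = true;

  queue.flush(); // the "next tick"
  return bar;
}
```

In this model `simulateClick(false)` ends with the bar collapsed and the popover closed, while `simulateClick(true)` ends with the bar expanded and the popover open, because the deferred check runs after the popover state has changed.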

The Pattern:

This illustrates how human feedback worked: the user described symptoms (“calendar won’t expand”, “0 stays showing”), and AgentLoop traced the symptoms to root causes, documented the analysis in memory files, and implemented fixes.

The searchbar-popover-onblur-interference-analysis.md memory file runs 417 lines---documenting event timing, React synthetic event behavior, Radix portal mechanics, and five potential solutions with trade-offs. This is the kind of systematic debugging that agents do automatically.

The Results

Database Layer (from Prompt 1):

- 13 migrations with proper sequencing

- 30+ indexes (composite, partial, GIST, GIN)

- RLS policies on all tables

- Database functions for availability, totals, ratings

- Storage buckets for images

Backend Layer (from Prompt 2):

- Type-safe server actions with Zod validation

- Stripe PaymentIntent integration with webhooks

- OAuth authentication (Google, GitHub)

- Search API with full filtering

- Comprehensive error handling

Frontend Layer (from Prompt 3):

- Next.js 14 with App Router

- Shadcn UI components throughout

- Interactive maps with marker clustering

- Multi-step listing creation

- Booking flow with Stripe checkout

- User and host dashboards

- Mobile responsiveness

Key Takeaways

Layered prompts work. Describing each layer explicitly, with each referencing the previous, created clear architecture without detailed specifications.

MCP tools provide context. Agents did not generate code blind---they verified schemas, tested policies, and looked up APIs.

3 prompts became 87 tasks. The amplification ratio (29x) shows how structured prompts scale into coordinated work.

Automatic QA scales. 87 tasks, each verified before merge. Quality enforced by automation.

2.5 days is real. From first commit to deployment. Three prompts. Complete full-stack application.

The Prompts Were Detailed But High-Level

This distinction matters. The prompts specified:

- What tables to create (not SQL syntax)

- What features to build (not implementation patterns)

- What tools to use (not how to configure them)

- What each layer should do (not file-by-file specifications)

The prompts were detailed in scope but abstract in implementation. They described the shape of the application without prescribing the code.

This is the key insight: structured, layered descriptions of what you want, combined with context-aware agents that can verify their work, produce full-stack software.

Try It Yourself

The Airbnb clone built in this case study is a complete full-stack application. The code demonstrates patterns for authentication, payments, search, booking, reviews, and more---all generated from three prompts and verified by automatic QA.

Get Started with AgentLoop | View the Live Demo

Built with AgentLoop orchestrating product-manager, engineer, and qa-tester agents. Three layered prompts. 87 tasks. MCP context awareness. 2.5 days to a full-stack app.